Back in 1992 a team from Boston Consulting Group set out to determine which factors could predict the success or failure of change management programs in general[ sirkin2005hard ]. They concluded that four “hard factors” were highly effective at predicting the outcome of a program. These hard factors were:

- The Duration of the program, and specifically the amount of time between reviews of progress. Question: How often do formal project reviews take place? Score: 1 if reviews are less than two months apart, 2 for 2 to 4 months, 3 for 4 to 8 months and 4 if they are more than 8 months apart.

- The Integrity, or effectiveness, of the individual or team leading the change, which includes their skills, motivation and the time they can dedicate to the task. Question: How capable is the leader, and how strong are the team’s skills and motivation? Do they all have enough time to dedicate to the change initiative? Score: 1 if all these criteria are met and 4 if the team are lacking in all areas. Score 2 or 3 for capabilities in between.

- The Commitment of two groups to the project: senior management, C1, and the local employees affected by the change, C2. Question 1: How often, consistently and urgently do senior managers communicate the need for change, and have they allocated enough resources to the program? Score 1 for clear commitment, 2 or 3 for a neutral approach and 4 for resistance or reluctance. Question 2: Do the employees involved in the change understand why it is happening, and are they enthusiastic and supportive or anxious and resisting? Score 1 for eager adoption of the change, 2 for being merely willing or neutral, and 3 or 4 for resisting the change, depending on the level of reluctance.

- The Effort required from employees over and above their existing workload. Question: How much extra effort must employees make to deliver the change and does this come on top of a heavy workload? Score: 1 if less than 10% incremental effort is required, 2 for 10 to 20%, 3 for 20 to 40% and 4 if over 40%.

These four hard measures are easy to recall because their initials form the word DICE.
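Two of the factors, Duration and Effort, are scored from measurable quantities, so their bands can be written directly as code. The Python sketch below is illustrative only: the function names are hypothetical, and the text does not say which band a boundary value belongs to. Here, following the "less than" wording, exactly 2 months or exactly 10% scores 2, and (as an assumption) exactly 4 or 8 months, or exactly 20% or 40%, falls into the lower-scoring band. Integrity and the two Commitment scores rest on judgement and are not modelled.

```python
def duration_score(months_between_reviews: float) -> int:
    """Score D (1 = best, 4 = worst) from the interval between formal reviews.

    ASSUMPTION: exactly 4 or 8 months falls into the lower-scoring band.
    """
    if months_between_reviews < 0:
        raise ValueError("review interval cannot be negative")
    if months_between_reviews < 2:
        return 1
    if months_between_reviews <= 4:
        return 2
    if months_between_reviews <= 8:
        return 3
    return 4


def effort_score(extra_effort_pct: float) -> int:
    """Score E (1 = best, 4 = worst) from incremental effort as a % of workload.

    ASSUMPTION: exactly 20% or 40% falls into the lower-scoring band.
    """
    if extra_effort_pct < 0:
        raise ValueError("incremental effort cannot be negative")
    if extra_effort_pct < 10:
        return 1
    if extra_effort_pct <= 20:
        return 2
    if extra_effort_pct <= 40:
        return 3
    return 4


# Hypothetical project: formal reviews every 3 months, 25% extra effort.
print(duration_score(3), effort_score(25))  # prints: 2 3
```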

The Boston Consulting team then scored 225 change management initiatives in a range of organisations using the questions set out above. By carrying out regression analysis on this data, they were able to determine the relative importance of each factor in terms of its impact on the success of the program. They concluded that Integrity, I, and senior management commitment, C1, were particularly important, so their scores should be doubled when calculating a total DICE score, which is expressed as:

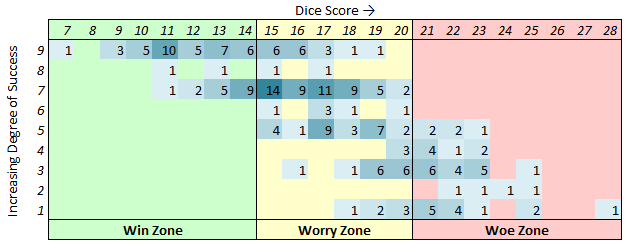
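Written out from the weighting described above (Integrity and senior management commitment counted twice, the other factors once), the total and its two extremes are:

$$
\begin{aligned}
\text{DICE} &= D + 2I + 2C_1 + C_2 + E \\
\text{DICE}_{\min} &= 1 + 2(1) + 2(1) + 1 + 1 = 7 \\
\text{DICE}_{\max} &= 4 + 2(4) + 2(4) + 4 + 4 = 28
\end{aligned}
$$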

Since each value can be between 1 and 4, the best possible DICE score is 7 and the worst 28. The chart below plots the distribution of these 225 scores against the degree of success of each change initiative. As can be seen, low scores correlate with successful outcomes, while high scores are associated with poor outcomes.

Figure 62 The Boston Consulting Group analysis of 225 change management initiatives, plotting DICE scores against the degree of success of each project. Numbers indicate how many projects fall at each score, with scores rounded up to the nearest integer. Adapted by the author from The Hard Side of Change Management, Sirkin et al., Harvard Business Review 2005[ sirkin2005hard ].
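The weighted total and its 7 to 28 range can be checked with a short Python sketch. The class and function names are hypothetical; the only rules taken from the text are that each factor is scored from 1 to 4 and that I and C1 count twice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiceFactors:
    """Factor scores, each on the 1 (best) to 4 (worst) scale."""
    duration: int           # D
    integrity: int          # I
    commitment_senior: int  # C1
    commitment_local: int   # C2
    effort: int             # E

    def __post_init__(self) -> None:
        # Reject any factor outside the 1-4 scale used by the questionnaire.
        for name, value in vars(self).items():
            if not 1 <= value <= 4:
                raise ValueError(f"{name} must be between 1 and 4, got {value}")


def dice_score(f: DiceFactors) -> int:
    # I and C1 are weighted twice, reflecting the regression result.
    return (f.duration + 2 * f.integrity + 2 * f.commitment_senior
            + f.commitment_local + f.effort)


print(dice_score(DiceFactors(1, 1, 1, 1, 1)))  # prints: 7 (best case)
print(dice_score(DiceFactors(4, 4, 4, 4, 4)))  # prints: 28 (worst case)
print(dice_score(DiceFactors(2, 1, 2, 3, 2)))  # prints: 13 (hypothetical project)
```

Validating the inputs in the constructor keeps an out-of-range answer from silently producing a total outside 7 to 28.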

The Boston Consulting team observed that projects with a score of 14 or less are likely to achieve the majority of their intended outcomes (the “Win Zone”), while those with a score of more than 20 are very unlikely to achieve the desired change (the “Woe Zone”). Scores between 15 and 20 show a wide range of outcomes, so executives need to assess these projects carefully (the “Worry Zone”). Over time, Boston Consulting Group has moved the lower boundary of the Woe Zone down to 18 points, providing an earlier indication that a project's outcome is no longer predictable.
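The zone boundaries can be expressed as a small classifier. This Python sketch is an assumption-laden illustration: the function name and the `woe_from` parameter are hypothetical, "more than 20" is read as 21 and above (the default), the revised boundary is read as 18 and above (`woe_from=18`), and fractional scores are rounded up first, as in the Figure 62 caption.

```python
import math


def dice_zone(score: float, woe_from: int = 21) -> str:
    """Classify a total DICE score into the Win, Worry or Woe Zone.

    woe_from=21 reflects the original 'more than 20' boundary; pass
    woe_from=18 for the later, lower Woe Zone boundary (ASSUMED reading).
    """
    rounded = math.ceil(score)  # round up, as in the Figure 62 caption
    if not 7 <= rounded <= 28:
        raise ValueError(f"DICE scores range from 7 to 28, got {score}")
    if rounded <= 14:
        return "Win"
    if rounded >= woe_from:
        return "Woe"
    return "Worry"


for s in (12, 17, 19, 23):
    print(s, dice_zone(s), dice_zone(s, woe_from=18))
```

With the revised boundary, a score of 19 moves from the Worry Zone to the Woe Zone, which is the earlier warning the text describes.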

In their paper, the authors of this scoring system provide some practical examples of its application and observe that DICE scores can be applied to individual projects or initiatives within a larger program. Thus a site's participation in a resource efficiency program can be evaluated using this method, as can individual projects (whether they involve technology or behaviour).
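Because each site or initiative receives its own score, a program lead could rank them and flag those outside the Win Zone. This Python sketch uses entirely hypothetical sites and factor scores for illustration; the `WEIGHTS` mapping restates the D + 2I + 2C1 + C2 + E weighting, and the Win Zone cut-off of 14 comes from the text.

```python
# Weighting from the DICE formula: I and C1 count twice.
WEIGHTS = {"D": 1, "I": 2, "C1": 2, "C2": 1, "E": 1}


def dice_score(factors: dict[str, int]) -> int:
    return sum(weight * factors[key] for key, weight in WEIGHTS.items())


def sites_needing_attention(
    sites: dict[str, dict[str, int]], win_max: int = 14
) -> list[tuple[str, int]]:
    """Return (site, score) pairs outside the Win Zone, worst score first."""
    scored = [(name, dice_score(f)) for name, f in sites.items()]
    flagged = [(name, s) for name, s in scored if s > win_max]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Hypothetical sites in a resource efficiency program; scores are illustrative.
sites = {
    "Site A": {"D": 1, "I": 1, "C1": 1, "C2": 2, "E": 2},  # total 9
    "Site B": {"D": 3, "I": 2, "C1": 2, "C2": 3, "E": 3},  # total 17
    "Site C": {"D": 4, "I": 3, "C1": 3, "C2": 4, "E": 3},  # total 23
}
print(sites_needing_attention(sites))  # prints: [('Site C', 23), ('Site B', 17)]
```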

The beauty of this approach is that it is simple and focuses attention on many of the practical aspects of change that will influence the outcome of a resource efficiency program. The authors stress, however, that many “soft” aspects of a program, such as culture, communication and attitudes, are also important; it is just that these are less consistently important and harder to measure. Another key benefit of this metric is that it is a Leading Indicator, or predictor, of the program outcome, so it can be applied early in a project to determine whether corrective action is needed.

The resource efficiency framework set out in my book will lead to a low DICE score if properly implemented.
